Hi there 👋 This week, we dive into UC Berkeley Assistant Professor Aditi Krishnapriyan’s fascinating work on scaling machine learning (ML) methods for scientific research. Aditi presented her research at an AI for Science event co-organized recently by Alan Gardner and yours truly, with the MIT Club of Northern California.

In her talk, “Developing Machine Learning Methods at Scale for Chemistry, Materials, and Climate Problems,” Aditi, whose appointment at UC Berkeley spans Chemical Engineering and EECS, outlined her group’s dual focus:

1. Developing fundamental ML models for chemistry and materials problems

2. Using scientific insights to create ML methods that don’t yet exist

According to Aditi, at the core of chemical and materials research is the potential energy surface – a representation of the energy landscape that governs molecular interactions and reactions. This surface is critical for understanding processes such as how molecules bind to a protein.

However, calculating these surfaces via quantum mechanics is computationally expensive and doesn’t scale well, and experimental methods are often prohibitively expensive. ML has become a powerful surrogate, offering faster alternatives to these resource-intensive simulations.
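For intuition about what a potential energy surface looks like, here is a minimal sketch. It assumes a Lennard-Jones pair potential in reduced units for just two atoms, a textbook toy model chosen for illustration and not something taken from the talk. Real surfaces span many atomic coordinates, which is where the cost of quantum-mechanical calculations comes from.

```python
def lennard_jones(r, epsilon=1.0, sigma=1.0):
    """Pair energy for two atoms a distance r apart (toy model, reduced units)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def scan_surface(r_min=0.9, r_max=3.0, n_points=2101):
    """Evaluate the energy on a uniform grid of separations."""
    step = (r_max - r_min) / (n_points - 1)
    grid = [r_min + i * step for i in range(n_points)]
    return [(r, lennard_jones(r)) for r in grid]


surface = scan_surface()
# The lowest point on the scanned surface is the preferred (equilibrium) separation.
r_eq, e_min = min(surface, key=lambda point: point[1])
print(f"equilibrium separation ~ {r_eq:.3f}, energy ~ {e_min:.3f}")
```

An ML surrogate would replace `lennard_jones` with a learned function that approximates far more expensive reference calculations.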

Aditi posed a key question: How do we develop ML methods that generalize across applications while leveraging modern computing capabilities? Many systems studied in science, such as molecules and climate models, can be represented as graphs. Inspired by the now-landmark paper “Attention Is All You Need,” Aditi and her team explored how attention mechanisms could be applied to graph-based systems.
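To show the general idea of attention on a graph, the sketch below applies plain scaled dot-product attention, restricted to each node's neighbors, on a tiny molecular graph. This is a generic illustration and not the mechanism from Aditi's group: the function names, the water-molecule graph, and the two-number atom features are all made up, and there are no learned weights.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def graph_attention(features, neighbors):
    """One round of scaled dot-product attention restricted to graph edges.

    features:  {node: feature vector}
    neighbors: {node: list of nodes it attends to}
    Queries, keys, and values are the raw features (no learned weights).
    """
    updated, weights = {}, {}
    for node, query in features.items():
        nbrs = neighbors[node]
        scale = math.sqrt(len(query))
        scores = [dot(query, features[n]) / scale for n in nbrs]
        alphas = softmax(scores)
        weights[node] = dict(zip(nbrs, alphas))
        # New feature = attention-weighted average of neighbor features.
        updated[node] = [
            sum(a * features[n][k] for a, n in zip(alphas, nbrs))
            for k in range(len(query))
        ]
    return updated, weights


# Hypothetical water molecule: O bonded to two H atoms; features are made up.
features = {"O": [1.0, 0.0], "H1": [0.0, 1.0], "H2": [0.0, 1.0]}
neighbors = {"O": ["O", "H1", "H2"], "H1": ["H1", "O"], "H2": ["H2", "O"]}
updated, weights = graph_attention(features, neighbors)
print(weights["O"])
```

Each atom only looks at the atoms it is connected to, so the graph structure decides where attention can flow.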

Aditi and her team developed a new graph attention mechanism for molecular representations, achieving top performance across benchmarks in catalysis, materials, and molecular applications. Remarkably, they were the only academic team to outperform industry giants like Meta and Microsoft on datasets like Open Catalyst. Aditi sees this as a sign of increasing democratization in the AI for Science field, bolstered by open science collaboration. To support this, her team made all their code and models publicly available.

Why This Matters

According to Aditi, one impactful application of these ML models is in identifying transition states, the highest-energy points along the lowest-energy pathways between two molecular states. Understanding these pathways is crucial in many areas, including rechargeable batteries. Transition states are key to processes like electrolyte decomposition, which directly affects energy density, lifespan, and safety in batteries.
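In a toy one-dimensional picture, the barrier separating two stable states can be located by scanning the energy along the path that connects them. The sketch below assumes a made-up double-well energy function with stable states at x = -1 and x = 1. It is an illustration only. Real transition-state searches work on high-dimensional surfaces and use dedicated path-finding methods.

```python
def energy(x):
    """Toy double-well energy along a 1D reaction coordinate (made up)."""
    return (x * x - 1.0) ** 2


def scan_path(start=-1.0, end=1.0, n_points=201):
    """Sample the energy at evenly spaced points along the path."""
    step = (end - start) / (n_points - 1)
    return [(start + i * step, energy(start + i * step)) for i in range(n_points)]


path = scan_path()
# The highest-energy point on the path between the two minima is the barrier top.
x_ts, e_ts = max(path, key=lambda point: point[1])
barrier = e_ts - energy(-1.0)
print(f"barrier top near x = {x_ts:.2f}, barrier height = {barrier:.2f}")
```

The barrier height is what controls how readily the system moves from one state to the other, which is why locating it matters for processes like electrolyte decomposition.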

Despite these successes, challenges remain. For example, while amusing failures in text-generation AI can be fixed easily, scientific ML models demand greater reliability due to the critical nature of their applications. Aditi’s team is addressing this by exploring multi-modal training strategies that incorporate experimental data alongside simulations, yielding promising results.

Additionally, scientists often prefer faster, specialized models for specific applications (e.g., amino acids or perovskites), driven by GPU scarcity and their domain-specific needs. To address this, Aditi’s team is working on distilling large models into smaller, more efficient versions tailored for specific use cases.
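The core idea of distillation can be sketched in a few lines: a small "student" model is fit to the predictions of a larger "teacher" on the narrow domain where it will be used. Everything below is a stand-in chosen for illustration (a sine function as the teacher, a straight line as the student, the interval 0 to 0.5 as the domain) and does not reflect the team's actual models or training setup.

```python
import math


def teacher(x):
    """Stand-in for a large, expensive general-purpose model."""
    return math.sin(x)


def fit_linear_student(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


# Narrow "domain of interest" where the small model is expected to be used.
domain = [i * 0.005 for i in range(101)]      # 0.0 .. 0.5
soft_labels = [teacher(x) for x in domain]    # teacher predictions, not ground truth
slope, intercept = fit_linear_student(domain, soft_labels)


def student(x):
    """Tiny specialized model distilled from the teacher."""
    return slope * x + intercept


max_error = max(abs(student(x) - teacher(x)) for x in domain)
print(f"student: y = {slope:.3f} * x + {intercept:.3f}, max error = {max_error:.4f}")
```

The trade-off is visible even in this toy: the student tracks the teacher closely inside its domain but is not expected to hold up outside it.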

For Aditi’s full talk, and additional insights into cutting-edge AI for chemistry and materials research from experts Wen Jie Ong (NVIDIA), Arash Khajeh (Toyota Research Institute), and Aldair Gongora (Lawrence Livermore National Lab), check out our full recording.

OpenAI, Sanofi, and Formation Bio announce Muse, an AI-powered tool designed to improve drug development by speeding up clinical trial recruitment.

Google.org announces $20 million fund to support AI for scientific breakthroughs. Press release calls out fields such as rare and neglected disease research, experimental biology, materials science, and sustainability.

AI drug discovery company Recursion completes its acquisition of Exscientia, moving Recursion toward more vertically-integrated and technology-enabled drug discovery.

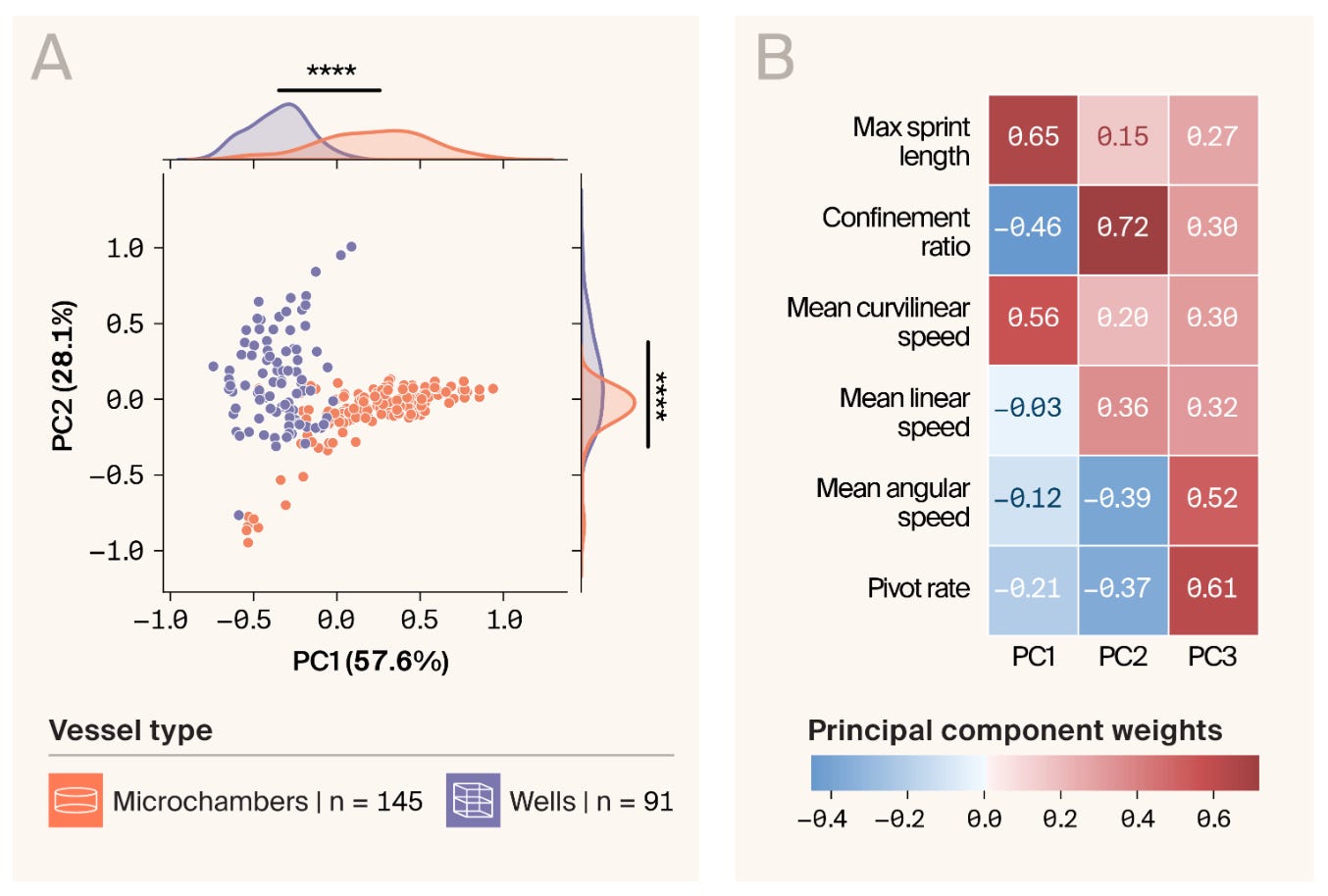

Arcadia Science publishes SwimTracker, a high-throughput imaging approach to track and quantify single-cell swimming.

Venture studio Flagship Pioneering seeks a Director of Product to “set the roadmap for the autonomous materials lab of the future and collaborate across disciplines.”

UCSF’s Hsiung Lab seeks multiple postdocs to contribute to their research in synthetic gene regulation, combinatorial genetics, and tissue biology.

DeepMind spin-off Isomorphic Labs seeks candidates for multiple open roles to meet their hiring team at NeurIPS.

Vancouver (NeurIPS Conference), Dec 11: “Breaking Silos” social, an open community gathering for AI x Science, where they will explore how open and collaborative AI is transforming scientific discovery.

SF Bay Area, Dec 11: AI + Health Demo Night, exploring where ML models are finding their way into clinical workflows, biosensors, and healthtech.

SF Bay Area, Dec 12: Laboratory Robotics Interest Group (LRIG) End of Year Event, featuring networking and technical presentations by Octant.

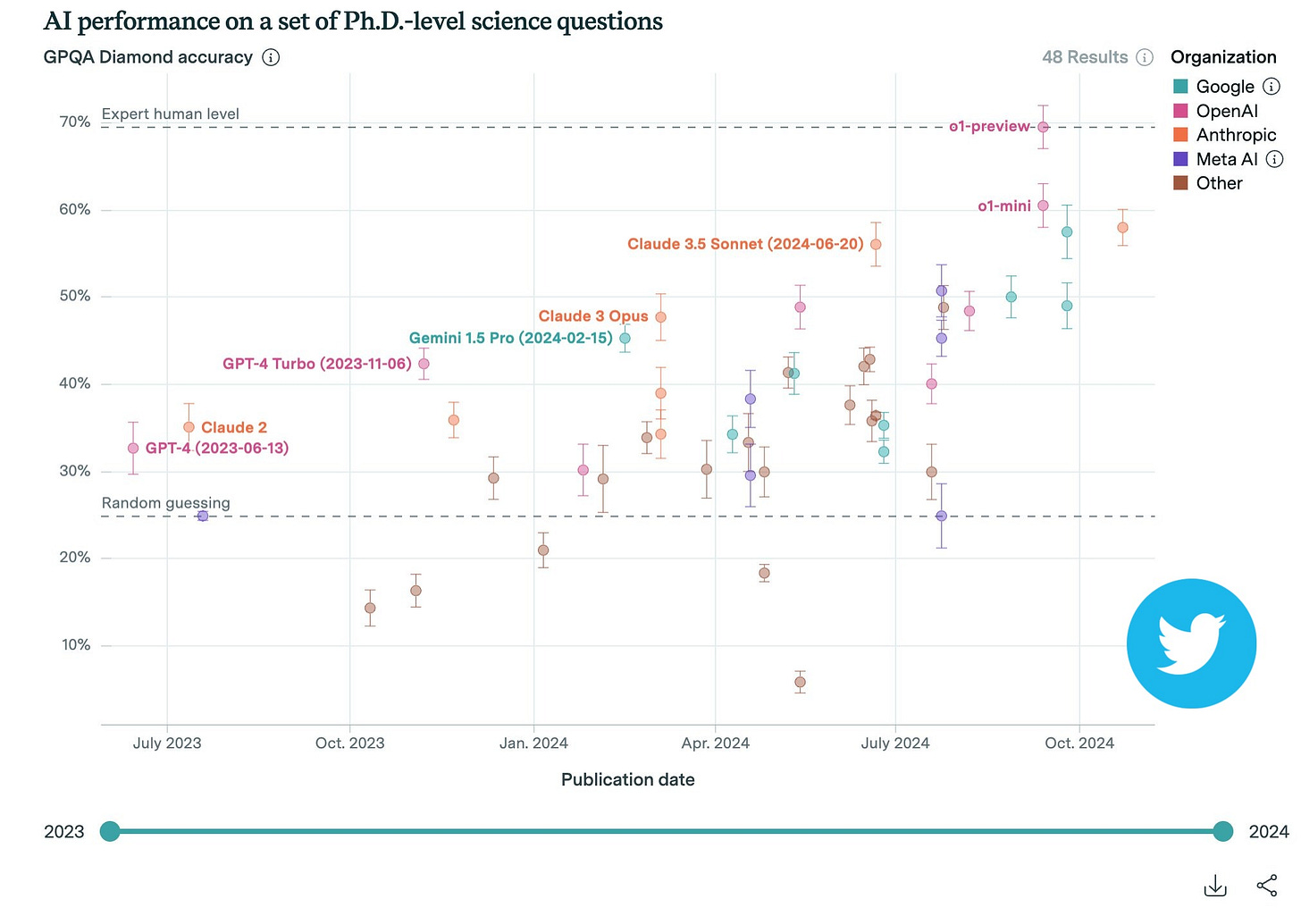

How are LLMs doing at answering PhD-level science questions? A fun graph courtesy of physicist and cosmologist Anthony Aguirre.

Find this newsletter valuable? Subscribe for regular insights, and share it with friends who are passionate about AI for Science!

RLHF! Like a good neural network, this newsletter is only as effective as the input we receive from you. We’d love your feedback—drop us a note on how we can make it better for you.

Catch you on the next one! – Nabil